AI Governance

Safety Measurement and Audit

Cognera AI provides AI safety solutions that address risks associated with advanced AI, such as unintended behaviors, ethical concerns, and the misalignment of AI systems with human values and interests. AI safety develops methodologies and practices to manage and mitigate these risks, ensuring that AI technologies contribute positively to society while minimizing negative impacts. Cognera's safety measurement and audit capabilities include:

Risk Auditor: evaluates potential risks associated with AI systems.

Reliability & Robustness: runs rigorous tests, including adversarial and regression tests under different conditions, to evaluate the vulnerabilities and weaknesses of AI systems.

Ethical Validator: establishes and monitors ethical baselines that AI models and features must adhere to.

Establish ethical guidelines for the use of AI, addressing issues like bias, discrimination, and the impact on society.

Regularly review and update ethical policies based on feedback and evolving ethical standards.

Explainability Inspector: audits the transparency and explainability of AI systems.

Ensure that AI models are interpretable and provide explanations for their decisions.

Use Explainable AI (XAI) techniques to make the decision-making process understandable to users.

Promote transparency in the development process, sharing information about algorithms and data sources.

Safety Alignment Meter: measures how well the objectives of AI systems align with human values and interests.
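Permutation feature importance is one model-agnostic XAI technique an explainability audit could use: shuffle one input feature at a time and measure how much accuracy drops. The sketch below is a minimal pure-Python illustration, not Cognera's implementation; the toy model and data are hypothetical. scikit-learn ships a production version as `sklearn.inspection.permutation_importance`.

```python
import random
from typing import Callable, Sequence


def permutation_importance(model: Callable[[list[float]], int],
                           rows: Sequence[list[float]],
                           labels: Sequence[int],
                           n_repeats: int = 20,
                           seed: int = 0) -> list[float]:
    """Mean drop in accuracy when each feature column is randomly shuffled."""
    rng = random.Random(seed)  # seeded so audits are reproducible

    def accuracy(data: Sequence[list[float]]) -> float:
        return sum(model(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            # Shuffle column j only, leaving every other feature in place.
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(shuffled))
        importances.append(sum(drops) / n_repeats)
    return importances
```

A feature the model ignores scores exactly 0.0, because shuffling it cannot change any prediction; a large score means the model's decisions depend heavily on that feature, which an auditor can then check against expectations.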

Continuous Safety Monitoring

Privacy Inspector: inspects the privacy and security of AI outputs.

Implement robust data encryption to protect sensitive information.

Adhere to privacy regulations and ensure user data is handled securely.

Regularly audit and update security protocols to address emerging threats.
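One simple check a privacy inspection of AI outputs might include is masking personally identifiable information (PII) before output reaches a user or a log. The sketch below assumes regex-based detection of emails and phone numbers only; the patterns are hypothetical examples, far from exhaustive, and not Cognera's rules.

```python
import re

# Hypothetical example patterns; real use needs broader, locale-aware rules.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact_pii(text: str) -> tuple[str, list[str]]:
    """Mask PII in an AI output; return the redacted text and the PII types found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        # subn returns the new string and the number of replacements made.
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found
```

For example, `redact_pii("Contact jane.doe@example.com or +1 555-123-4567.")` returns `("Contact [EMAIL] or [PHONE].", ["EMAIL", "PHONE"])`. Returning the detected types as well as the text lets a monitor count privacy incidents over time, not just hide them.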

Bias Inspector: scrutinizes AI outputs for potential bias.

Conduct thorough bias assessments to identify and rectify biases in training data.

Implement techniques such as fairness-aware machine learning to reduce and monitor biases in AI predictions.

Regularly audit models for fairness and take corrective actions if biases are identified.
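A fairness audit of model outputs often starts by comparing positive-outcome rates across groups. The sketch below computes two standard group metrics, demographic parity difference and the disparate impact ratio, over binary predictions. It is an illustrative assumption, not Cognera's Bias Inspector; the 0.8 threshold mentioned in the comments is the "four-fifths rule" heuristic from US employment-selection guidance, used here only as an example.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive (1) predictions within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def fairness_report(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Demographic parity difference and disparate impact ratio across groups."""
    rates = selection_rates(predictions, groups)
    lowest, highest = min(rates.values()), max(rates.values())
    return {
        # 0.0 means every group receives positive outcomes at the same rate.
        "parity_difference": highest - lowest,
        # 1.0 means parity; the four-fifths heuristic flags values below 0.8.
        "disparate_impact": lowest / highest if highest else 1.0,
    }
```

With group "a" receiving positive predictions 50% of the time and group "b" 25%, the report gives a parity difference of 0.25 and a disparate impact ratio of 0.5, which would be flagged for corrective action under the example threshold.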

Robustness and Resilience:

Test AI systems against a variety of scenarios to identify vulnerabilities.

Implement mechanisms to handle adversarial attacks and unexpected inputs.

Regularly update and improve models to adapt to evolving threats and challenges.
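A basic robustness test perturbs inputs slightly and checks whether predictions stay stable. The sketch below measures a "flip rate" under bounded uniform random noise. It is a simple stand-in, not a true adversarial attack (gradient-based attacks search for worst-case perturbations and are much stronger); the model interface and parameter values are assumptions for illustration.

```python
import random
from typing import Callable, Sequence


def flip_rate(model: Callable[[list[float]], int],
              inputs: Sequence[list[float]],
              epsilon: float = 0.05,
              trials: int = 20,
              seed: int = 0) -> float:
    """Fraction of inputs whose prediction changes under small random noise.

    Every feature is perturbed by uniform noise in [-epsilon, epsilon];
    an input counts as fragile if any of `trials` perturbations flips it.
    """
    if not inputs:
        raise ValueError("need at least one input")
    rng = random.Random(seed)  # seeded so test runs are reproducible
    fragile = 0
    for x in inputs:
        baseline = model(x)
        for _ in range(trials):
            noisy = [v + rng.uniform(-epsilon, epsilon) for v in x]
            if model(noisy) != baseline:
                fragile += 1
                break  # one flip is enough to mark this input as fragile
    return fragile / len(inputs)
```

Tracking this number across model versions on a fixed input set also gives a simple regression check: a new version whose flip rate rises noticeably is less stable than the one it replaces.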

User Consent and Control:

Provide users with clear information about how the AI system will use their data.

Give users control over their data and the ability to opt out of specific AI-driven features.

Ensure transparent communication about the purpose and capabilities of the AI system.
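Per-feature opt-out can be enforced by checking a consent record before any AI-driven code path runs. The sketch below is a hypothetical in-memory registry; the class, function, and feature names are illustrative assumptions, and a real system would persist consent and audit changes to it.

```python
from dataclasses import dataclass, field
from typing import Callable, TypeVar

T = TypeVar("T")


@dataclass
class ConsentRegistry:
    """Records which AI-driven features each user has opted out of."""
    opt_outs: dict[str, set[str]] = field(default_factory=dict)

    def opt_out(self, user_id: str, feature: str) -> None:
        self.opt_outs.setdefault(user_id, set()).add(feature)

    def opt_in(self, user_id: str, feature: str) -> None:
        self.opt_outs.get(user_id, set()).discard(feature)

    def allowed(self, user_id: str, feature: str) -> bool:
        # Default here is "allowed unless opted out"; invert it where explicit opt-in is required.
        return feature not in self.opt_outs.get(user_id, set())


def run_feature(registry: ConsentRegistry, user_id: str, feature: str,
                ai_path: Callable[[], T], fallback: Callable[[], T]) -> T:
    """Run the AI-driven path only for users who have not opted out of it."""
    return ai_path() if registry.allowed(user_id, feature) else fallback()
```

Routing every AI feature through one gate like `run_feature` keeps the consent decision in a single place instead of scattering checks across the codebase. Some jurisdictions require affirmative opt-in consent, in which case the default in `allowed` should be reversed.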

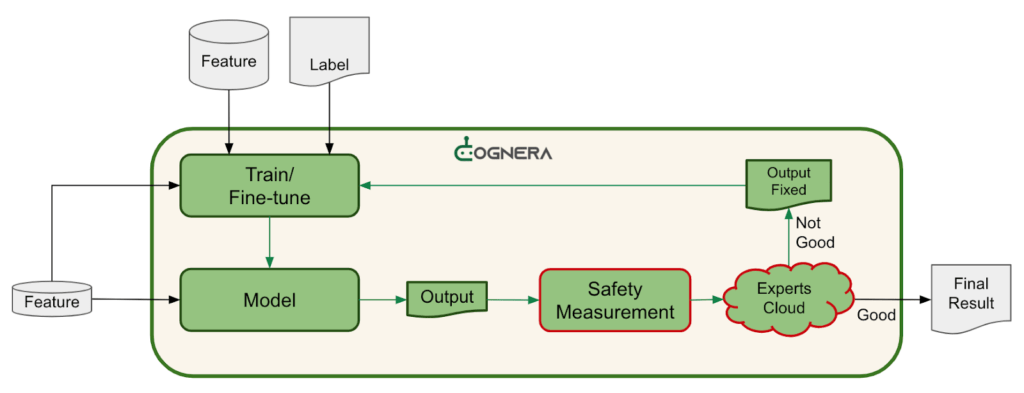

Human Experts in the Loop

For sensitive tasks where AI output must be monitored, Cognera draws on a cloud of human experts in real time to ensure that the output is safe and accurate. This human-in-the-loop (HITL) approach ensures that human oversight, guidance, and decision-making are incorporated into AI processes.

Cognera AI also uses this process for in-context learning in the Foundational Models Pillar.

Here’s how HITL is applied to AI safety:

Training and Validation

Humans are involved in the initial training and validation of AI models. They play a crucial role in curating and annotating training datasets to ensure data quality and relevance.

Human experts review and assess the model’s performance during training, identifying potential biases, errors, or areas for improvement.
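When several humans annotate the same training data, inter-annotator agreement is a standard way to check label quality. The sketch below computes Cohen's kappa for two annotators; it is a generic illustration, not Cognera's curation tooling, and the label names are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators chose the same label.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, from each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        # Both used one identical label everywhere; kappa is undefined, treat as full agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)
```

For example, annotators who agree on 3 of 4 items with label distributions {ok: 3, bias: 1} and {ok: 2, bias: 2} have p_o = 0.75 and p_e = 0.5, so kappa = 0.5. Batches whose kappa falls below a chosen threshold could be sent back for adjudication before they enter the training set.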

Algorithmic Decision Making

In critical decision-making scenarios, especially those with potential ethical implications, a human takes part in the decision alongside the AI system.

Human oversight ensures that decisions align with ethical standards, regulatory requirements, and societal norms.
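One way to keep a human in critical decisions is a gate that lets routine AI decisions through but requires a named reviewer, who may accept or override, for high-stakes categories. In the sketch below, `Decision`, `finalize`, and the category names in `CRITICAL_CATEGORIES` are hypothetical; it illustrates the pattern, not Cognera's workflow.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Decision:
    action: str                        # what the AI system proposes
    category: str                      # hypothetical decision type, e.g. "loan_denial"
    approved_by: Optional[str] = None  # reviewer who signed off, if any
    overridden: bool = False           # True if the reviewer changed the action


# Hypothetical categories that always require human sign-off.
CRITICAL_CATEGORIES = {"loan_denial", "medical_triage"}


def finalize(decision: Decision,
             reviewer: Callable[[Decision], tuple[str, Optional[str]]]) -> Decision:
    """Return the final decision, requiring human review for critical categories.

    `reviewer` returns (reviewer_id, replacement_action); a replacement of
    None means the reviewer accepts the AI proposal unchanged.
    """
    if decision.category not in CRITICAL_CATEGORIES:
        return decision  # non-critical: the AI decision stands
    reviewer_id, replacement = reviewer(decision)
    decision.approved_by = reviewer_id
    if replacement is not None and replacement != decision.action:
        decision.action = replacement
        decision.overridden = True
    return decision
```

Recording who approved each critical decision, and whether they overrode it, gives an audit trail that can later show whether decisions met ethical and regulatory requirements.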

Anomaly Detection and Error Correction

Humans are responsible for monitoring AI system outputs for anomalies, errors, or unexpected behavior.

In case of errors or uncertainty, the system can alert a human operator, who can intervene, correct the issue, and provide feedback to improve the model.
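A common way to implement this alerting step is to route each output by the model's confidence: confident outputs are released automatically, and uncertain ones go to a human review queue. The sketch below assumes the model reports a probability for its chosen label; the 0.8 threshold and all names are hypothetical and would need tuning per task.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model's probability for `label`, in [0, 1]


@dataclass
class EscalationRouter:
    threshold: float = 0.8  # hypothetical cut-off; tune per task and risk level
    review_queue: list[Prediction] = field(default_factory=list)

    def route(self, pred: Prediction) -> str:
        """Auto-release confident outputs; queue uncertain ones for a human operator."""
        if pred.confidence >= self.threshold:
            return "auto"
        self.review_queue.append(pred)
        return "human_review"
```

Raising the threshold sends more traffic to experts and lowers the risk of unreviewed errors; lowering it does the opposite, so the value is effectively a policy decision about how much human effort each task warrants.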

Handling Edge Cases

Humans are often better equipped to handle rare or novel situations that the AI system may not have encountered during training.

Human input is valuable in addressing edge cases, ensuring that the AI system doesn’t make incorrect or potentially harmful decisions in unfamiliar scenarios.
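To hand novel situations to humans, the system first has to recognize them. The sketch below flags inputs whose features lie far outside the training distribution, using per-feature z-scores. This is a deliberately simple assumption; production systems often use richer out-of-distribution detectors, and the class name and 3.0 limit are hypothetical.

```python
import statistics


class NoveltyDetector:
    """Flags inputs that lie far from the training data, using per-feature z-scores."""

    def __init__(self, training_rows: list[list[float]], z_limit: float = 3.0):
        columns = list(zip(*training_rows))
        self.means = [statistics.fmean(col) for col in columns]
        # A constant feature has zero spread; use a tiny value to avoid dividing by zero.
        self.stds = [statistics.pstdev(col) or 1e-12 for col in columns]
        self.z_limit = z_limit

    def is_novel(self, row: list[float]) -> bool:
        """True if any feature is more than z_limit standard deviations from its mean."""
        return any(abs(x - m) / s > self.z_limit
                   for x, m, s in zip(row, self.means, self.stds))
```

Inputs for which `is_novel` returns True could be routed to a human expert instead of being decided automatically, which matches the division of labor described above.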

Continuous Feedback Loop

Establishing a continuous feedback loop involving human input helps improve the AI system over time. Humans provide feedback on system performance, identify areas for enhancement, and contribute to iterative model updates.
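A minimal feedback loop needs two things: a record of how humans judged each model output, and a way to turn their corrections into new training data. The sketch below is a hypothetical in-memory log that tracks the human-model agreement rate and extracts corrected examples; the names and record layout are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FeedbackLog:
    """Stores human reviews of model outputs for monitoring and retraining."""
    # Each record is (input_text, model_label, human_label).
    records: list[tuple[str, str, str]] = field(default_factory=list)

    def add(self, text: str, model_label: str, human_label: str) -> None:
        self.records.append((text, model_label, human_label))

    def agreement_rate(self) -> Optional[float]:
        """Fraction of reviewed outputs the human accepted; None if nothing reviewed yet."""
        if not self.records:
            return None
        agreed = sum(model == human for _, model, human in self.records)
        return agreed / len(self.records)

    def corrections(self) -> list[tuple[str, str]]:
        """Human-corrected examples, usable as labeled data for the next model update."""
        return [(text, human) for text, model, human in self.records if model != human]
```

A falling agreement rate is an early signal that the model needs attention, while `corrections()` supplies exactly the cases the current model gets wrong, which are often the most useful examples for the next iteration.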

User Interaction and Interpretability

Human-in-the-loop designs prioritize user interaction and interpretability. AI systems should be designed to explain their decisions, and humans should have the ability to question or override AI decisions when necessary.

Ethical Considerations

Human oversight is essential for addressing ethical considerations associated with AI, such as bias mitigation, fairness, and adherence to ethical guidelines.